In the era of data-driven decision-making, organisations are constantly seeking innovative ways to manage, analyse, and derive insights from their vast data resources. Traditionally, on-premise Big Data tools have been the go-to solution for handling large volumes of data. However, these tools come with their own set of limitations, often hindering organisations from fully capitalising on the potential of their data assets. In contrast, cloud-based Big Data tools offer elastic scalability, greater flexibility, and pay-as-you-go cost models, revolutionising the way companies approach data engineering.

Limitations of On-Premise Big Data Tools:

- Scalability Constraints: On-premise Big Data tools often face scalability challenges, because organisations must invest in physical infrastructure upfront. Scaling up to meet growing data demands requires additional hardware purchases, so capacity ends up either over-provisioned for peak load (idle, paid-for hardware) or under-provisioned (jobs queue or slow down when demand spikes).

- High Maintenance Costs: Maintaining on-premise infrastructure incurs significant costs, including hardware procurement, software licenses, and ongoing maintenance. Additionally, organisations must allocate resources for infrastructure management, upgrades, and troubleshooting, further increasing operational expenses.

- Limited Agility: On-premise Big Data tools lack the agility to adapt to changing business needs and evolving data requirements. Deploying new technologies or scaling infrastructure to meet fluctuating workloads often involves time-consuming processes and delays, hindering innovation and competitiveness.

- Complexity: Managing on-premise Big Data tools is resource-intensive and requires specialised skills and expertise. Integrating disparate systems, ensuring interoperability, and optimising performance call for dedicated teams, adding overhead to data engineering workflows.
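The over-provisioning problem can be made concrete with a little arithmetic. The sketch below assumes a hypothetical hourly demand profile (in worker nodes) for one day, sizes a fixed cluster for the busiest hour, and reports how much of the paid-for capacity is actually used. The demand numbers and the `fixed_cluster_utilisation` helper are illustrative assumptions, not measurements.

```python
# Hypothetical hourly demand, in worker nodes, over one day:
# quiet overnight, a busy batch window, then steady daytime load.
HOURLY_DEMAND = [2] * 8 + [10] * 4 + [6] * 8 + [3] * 4


def fixed_cluster_utilisation(demand: list[int]) -> tuple[int, float]:
    """Size a fixed cluster for peak demand; return (nodes, average utilisation)."""
    nodes = max(demand)                           # hardware must cover the busiest hour
    used_node_hours = sum(demand)                 # capacity the workload actually needs
    provisioned_node_hours = nodes * len(demand)  # capacity that is paid for
    return nodes, used_node_hours / provisioned_node_hours


nodes, utilisation = fixed_cluster_utilisation(HOURLY_DEMAND)
print(f"{nodes} nodes, {utilisation:.0%} average utilisation")  # 10 nodes, 48% average utilisation
```

With this profile a 10-node cluster averages roughly 48% utilisation: more than half of the node-hours sit idle, yet shrinking the cluster would leave the batch-window peak under-provisioned.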

Advantages of Cloud-Based Big Data Tools:

- Scalability: Cloud-based Big Data tools offer elastic, near-unlimited scalability, allowing organisations to scale resources up or down based on demand. With on-demand provisioning and pay-as-you-go pricing models, organisations can optimise costs and avoid both over-provisioning and under-provisioning of resources.

- Cost-Effectiveness: Cloud platforms eliminate upfront capital expenditure on hardware infrastructure and shift much of the software licensing and maintenance burden to the provider. Organisations can leverage cloud-based services and pay only for the resources they consume, which can deliver significant cost savings and improved cost predictability.

- Flexibility and Agility: Cloud-based Big Data tools provide greater flexibility and agility, enabling organisations to experiment with new technologies, deploy applications quickly, and iterate on solutions rapidly. With cloud-native services and managed offerings, organisations can focus on innovation rather than infrastructure management.

- Integration and Interoperability: Cloud platforms offer seamless integration with a wide range of data sources, applications, and third-party services. Built-in connectors, APIs, and compatibility with industry standards facilitate data integration and interoperability, streamlining data engineering workflows and enabling organisations to derive insights from diverse data sources.
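One rough way to reason about the pay-as-you-go argument is to price the same demand profile both ways. The sketch below assumes hypothetical per-node-hour rates (owned capacity cheaper per hour once amortised, on-demand capacity dearer but billed only when used) and a cloud cluster that tracks demand exactly. None of these figures are real prices, and the helper names are illustrative.

```python
# Hypothetical rates, not real prices.
OWNED_RATE = 0.30      # assumed amortised cost per owned node-hour
ON_DEMAND_RATE = 0.50  # assumed price per on-demand cloud node-hour
HOURLY_DEMAND = [2] * 8 + [10] * 4 + [6] * 8 + [3] * 4  # nodes needed each hour


def fixed_cost(demand: list[int], rate: float) -> float:
    """Owned cluster sized for peak: every node is paid for every hour."""
    return max(demand) * len(demand) * rate


def pay_as_you_go_cost(demand: list[int], rate: float) -> float:
    """Elastic capacity: pay only for the node-hours each hour actually needs."""
    return sum(demand) * rate


def break_even_utilisation(owned_rate: float, on_demand_rate: float) -> float:
    """Average utilisation below which pay-as-you-go is the cheaper option."""
    return owned_rate / on_demand_rate


fixed = fixed_cost(HOURLY_DEMAND, OWNED_RATE)
elastic = pay_as_you_go_cost(HOURLY_DEMAND, ON_DEMAND_RATE)
print(f"fixed: {fixed:.2f}, pay-as-you-go: {elastic:.2f}")  # fixed: 72.00, pay-as-you-go: 58.00
threshold = break_even_utilisation(OWNED_RATE, ON_DEMAND_RATE)
print(f"pay-as-you-go is cheaper below {threshold:.0%} utilisation")  # ... below 60% utilisation
```

Pay-as-you-go wins whenever `sum(demand) * on_demand_rate < peak * hours * owned_rate`, which rearranges to average utilisation below `owned_rate / on_demand_rate`. Here utilisation is about 48%, under the 60% break-even, so the elastic option is cheaper; a steadily busy workload would narrow or reverse that gap.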

Comprehensive List of On-Premise Big Data Tools and Cloud-Based Alternatives:

- On-Premise Tools:
  - Hadoop Distributed File System (HDFS)
  - Apache Spark
  - Apache Hive
  - Apache HBase
  - MongoDB
  - Cassandra
  - Elasticsearch
  - Oracle Exadata
- Cloud-Based Alternatives:
  - Amazon S3 (Storage)
  - Amazon EMR (Hadoop/Spark)
  - Amazon Redshift (Data Warehouse)
  - Google BigQuery (Data Warehouse)
  - Microsoft Azure Data Lake (Storage)
  - Microsoft Azure HDInsight (Hadoop/Spark)
  - Snowflake (Data Warehouse)
  - Databricks (Unified Analytics Platform)
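Because Spark reads storage through Hadoop's `FileSystem` abstraction, pointing an existing job at Amazon S3 instead of HDFS is often largely a matter of changing path URIs (`s3a://` through the `hadoop-aws` connector in open-source deployments, or `s3://` through EMRFS on Amazon EMR) plus credential configuration. The helper below is a hypothetical sketch of such a path rewrite: the `hdfs_to_s3a` name and the `example-data-lake` bucket are illustrative, and it assumes the S3 key layout mirrors the HDFS directory layout.

```python
from urllib.parse import urlparse


def hdfs_to_s3a(hdfs_uri: str, bucket: str) -> str:
    """Map hdfs://namenode:port/path onto the same path in a (hypothetical) S3 bucket."""
    parsed = urlparse(hdfs_uri)
    if parsed.scheme != "hdfs":
        raise ValueError(f"not an HDFS URI: {hdfs_uri}")
    # Drop the NameNode host:port; the object key keeps the original directory path.
    return f"s3a://{bucket}{parsed.path}"


path = hdfs_to_s3a("hdfs://namenode:8020/warehouse/events/2024", "example-data-lake")
print(path)  # s3a://example-data-lake/warehouse/events/2024
```

The rewritten path could then be passed to a reader such as `spark.read.parquet(path)`. A real migration needs more than a path change, though: S3 has no atomic directory rename, which affects how some jobs commit their output.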

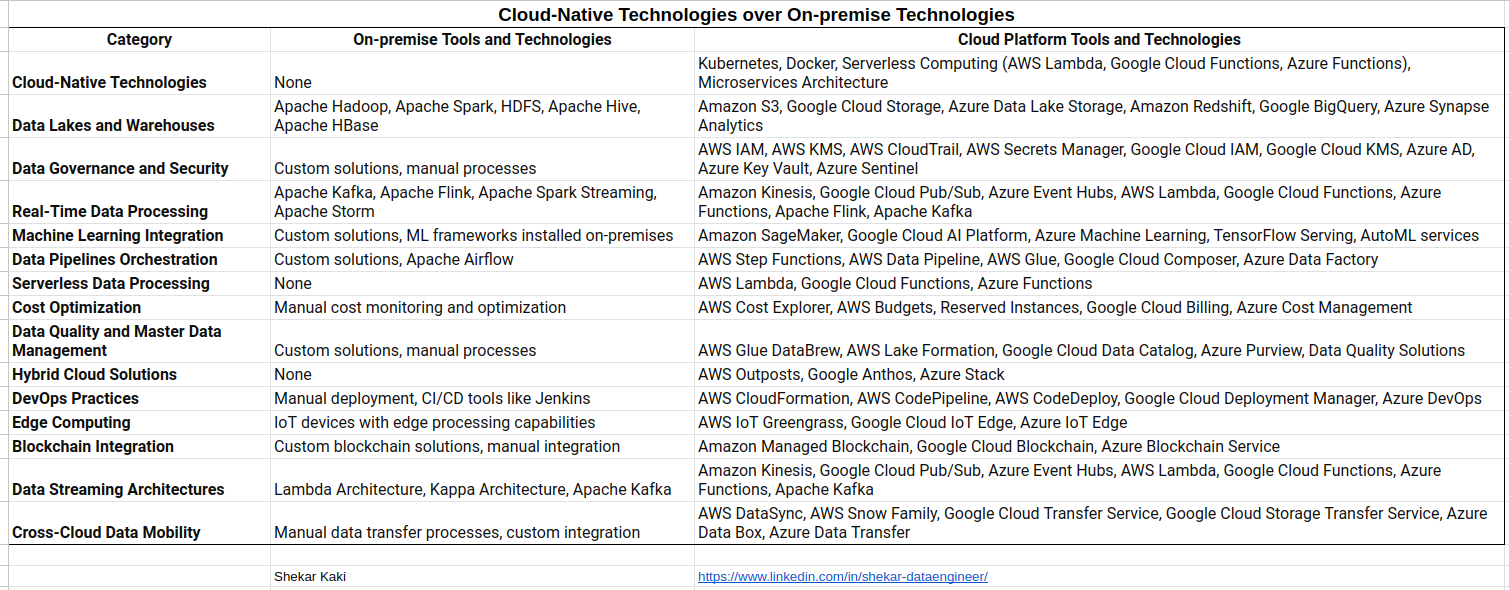

Comprehensive List of Cloud Tools & Technologies:

Case Study: Building an On-Premise Data Lake vs. a Cloud Lakehouse Architecture:

Consider a scenario where a company aims to build a data lake to consolidate and analyse various data sources, including structured and unstructured data.

- On-Premise Data Lake: Building a data lake on-premise requires provisioning and managing hardware infrastructure, installing and configuring software components, and ensuring data security and governance. The process involves significant upfront investment, ongoing maintenance, and resource allocation for infrastructure management.

- Cloud Lakehouse Architecture: Leveraging cloud platforms such as AWS, Google Cloud, or Microsoft Azure, organisations can build a cloud-based data lake with minimal upfront investment and operational overhead. A lakehouse goes a step further by adding warehouse-style features, such as ACID transactions and schema enforcement, on top of that low-cost object storage, typically through an open table format such as Delta Lake, Apache Iceberg, or Apache Hudi. Cloud-based lakehouses offer scalable storage, integrated analytics services, and built-in security features, enabling organisations to ingest, process, and analyse data at scale. With managed services and serverless offerings, organisations can focus on data analysis and insight generation rather than infrastructure management.
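The table layer that turns a cloud data lake into a lakehouse can be illustrated with a toy model. Formats such as Delta Lake keep an ordered log of commits alongside the data files; each commit records which files were added or removed, so readers can reconstruct any table version and two concurrent writers cannot both claim the same version. The sketch below is a simplified, in-memory illustration loosely modelled on that idea: the `ToyTable` class, the `_log/` key layout, the dict standing in for object storage, and the file names are all hypothetical, and real formats add checkpoints, schemas, statistics, and storage-specific atomicity guarantees.

```python
import json
from typing import Optional


def log_key(version: int) -> str:
    # Zero-padded so that lexicographic key order matches version order.
    return f"_log/{version:020d}.json"


class ToyTable:
    """Toy lakehouse table: an ordered JSON commit log in a shared object store."""

    def __init__(self, store: dict) -> None:
        self.store = store  # hypothetical object store (key -> bytes), shared by writers

    def latest_version(self) -> int:
        versions = [int(key[len("_log/"):-len(".json")])
                    for key in self.store if key.startswith("_log/")]
        return max(versions, default=-1)

    def commit(self, read_version: int, add: list, remove: Optional[list] = None) -> int:
        """Publish version read_version + 1, failing if another writer got there first."""
        new_version = read_version + 1
        key = log_key(new_version)
        # Put-if-absent: only the first writer to create this entry wins. A dict
        # check is not atomic; real formats rely on the storage layer or an
        # external coordinator to make this step atomic.
        if key in self.store:
            raise RuntimeError(f"conflict: version {new_version} already committed")
        self.store[key] = json.dumps({"add": add, "remove": remove or []}).encode()
        return new_version

    def snapshot(self, version: Optional[int] = None) -> set:
        """Replay the log to find the live data files as of a given version."""
        target = self.latest_version() if version is None else version
        files: set = set()
        for v in range(target + 1):
            entry = json.loads(self.store[log_key(v)])
            files |= set(entry["add"])
            files -= set(entry["remove"])
        return files


# Usage: two writers share one store; file names are hypothetical.
store: dict = {}
writer_a, writer_b = ToyTable(store), ToyTable(store)
v0 = writer_a.commit(-1, add=["part-000.parquet"])
v1 = writer_a.commit(v0, add=["part-001.parquet"])
try:
    writer_b.commit(v0, add=["part-002.parquet"])  # B also read version 0, so it loses
except RuntimeError as err:
    print(err)  # conflict: version 1 already committed
# B re-reads the log and retries against the latest version.
v2 = writer_b.commit(writer_b.latest_version(), add=["part-003.parquet"],
                     remove=["part-000.parquet"])
print(sorted(writer_a.snapshot()))    # ['part-001.parquet', 'part-003.parquet']
print(sorted(writer_a.snapshot(v0)))  # ['part-000.parquet']
```

Because data files are never modified in place, a conflicting commit leaves readers on the previous consistent version, and older versions stay queryable by replaying the log up to that point.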

Conclusion:

The move from on-premise Big Data tools to cloud-based alternatives represents a paradigm shift in data engineering practices.

Cloud platforms offer elastic scalability, cost-effectiveness, and agility, empowering organisations to unlock the full potential of their data assets. By leveraging cloud-based Big Data tools and services, organisations can overcome the limitations of on-premise infrastructure, accelerate innovation, and drive business growth in the digital age.

LinkedIn Article: https://www.linkedin.com/pulse/unlocking-power-cloud-revolutionizing-data-engineering-shekar-kaki-8cxjc/?trackingId=8BPLQiMsRF6xNMImwnDD8Q%3D%3D